Estimating the Ginormous Growth of Genomics Data and Storage

Genomic data science is a field of study that enables researchers to use powerful computational and statistical methods to decode the functional information hidden in DNA sequences. Our ability to sequence DNA has far outpaced our ability to decipher the information it contains, so genomic data science will be a vibrant field of research for many years to come.

Researchers are now generating more genomic data than ever before to understand how the genome functions and affects human health and disease. These data are coming from millions of people in various populations across the world. Much of the data generated from genome sequencing research must be stored, even if over time, older information is discarded. The demand for storage is colossal with increased and new areas of life sciences research.

New areas of life sciences research are driving up data volumes

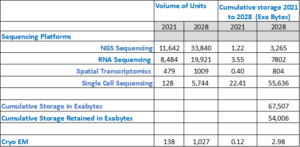

We identified four major life sciences research areas that are generating large volumes of data. For each area, we estimated the demand for the instruments that are producing this data. We then estimated average data generated per year by each of the instruments. The total storage demand over the study scope period is obtained by multiplying the number of sequencing instruments with the average data generated by each instrument.

Following areas of life sciences were considered in the data generation estimation:

Next-generation sequencing (NGS) is a massively parallel sequencing technology that offers ultra-high throughput, scalability, and speed.

RNA sequencing (RNA-Seq) uses the capabilities of high-throughput sequencing methods to provide insight into the transcriptome of a cell.

Spatial transcriptomics are methods designed for assigning cell types (identified by the mRNA readouts) to their locations in the histological sections. This method can also be used to determine subcellular localization of mRNA molecules.

Cryogenic electron microscopy (cryo-EM) is a cryo microscopy technique applied on samples cooled to cryogenic temperatures. This approach has attracted wide attention as an alternative to X-ray crystallography or NMR spectroscopy for macromolecular structure determination without the need for crystallization.

Single-cell sequencing technologies refer to the sequencing of a single-cell genome or transcriptome, so as to obtain genomic, transcriptome or other multi-omics information to reveal cell population differences and cellular evolutionary relationships.

How to quantify the colossal storage needs?

We made the following assumptions to estimate the storage needs between years 2021 through 2028.

- We assumed the information provided in the paper[1] “Big Data – astronomical or genomical” published in 2015 as the basis of our calculation. In the year 2015, the authors working with various subject matter experts surmised that in 2015, there were 2500 gene sequencing instruments and the storage need at that time was 9 Petabytes (PBs).

- Based on their and other research studies, they looked into two possibilities

- Storage needs will double every 12 months (conservative case)

- Storage needs will double every 7 months (aggressive case).

- In our estimation exercise, we assumed that the growth rate will be the average of the above two cases.

- Based on our discussion with experts, we assumed storage needs per RNA, Spatial transcriptomics, Single-cell sequencing instrument will be 4,8, and 100 times that of an NGS sequencing instrument respectively.

- We worked with a Grand View Research, a market research company to estimate the global volumes of NGS sequencing, RNA sequencing, Spatial transcriptomics, single cell sequencing and Cryo EM instruments for the period between 2021 thru 2028.

- The volume of each instrument type multiplied by unit storage requirement for each category provided the total storage need. We also assumed that each year, 80% of the data will be retained (i.e.,20% discarded).

- The following table provides a summary of our estimate.

From the table, we see that the need for storage for sequencing is around 54 Exabytes in 2028 (assuming a retention of 80%). For this exercise, we have made many assumptions re: growth, sequencing technologies, retention, and size based on what we know today. One thing is certain: storage needs for life sciences and genomics will only increase, and it will be ginormous.

[1] https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.1002195