IBM – Building Momentum to Win the Hybrid Cloud Platform War

By Ravi Shankar and Srini Chari, PhD, MBA – Cabot Partners, May 8, 2020.

As the evolving impacts of COVID-19 ripple globally through our communities, the new IBM CEO Arvind Krishna kicked off the virtual IBM Think conference on May 5, 2020 with an apt assertion: “There's no question this pandemic is a powerful force of disruption and an unprecedented tragedy, but it is also a critical turning point.” Krishna said, “History will look back on this as the moment when the digital transformation of business and society suddenly accelerated, and together, we laid the groundwork for the post-COVID world.”

Originally set to be held in San Francisco, the IBM 2020 Think Digital Experience quickly became one of the many tech events held online. Attendance was strong: about 100,000 non-IBM participants registered, and over 170,000 unique visitors attended sessions and consumed content. On average, IBM clients and Business Partners joined 6.5 sessions and watched most of those sessions. Compared to last year, three times as many clients and twice as many Business Partners took part.

Think Digital featured many key announcements and offered virtual attendees an exciting array of speaker sessions, real-time Q&As and technical training. These highlighted how hybrid cloud and artificial intelligence (AI) are galvanizing digital transformation, and how IBM is building an agile and scalable platform that helps developers, partners and clients overcome data and application migration challenges from the edge to the cloud.

Lift and Shift to the Public Cloud is Inadequate for Most Enterprise Workloads[i]

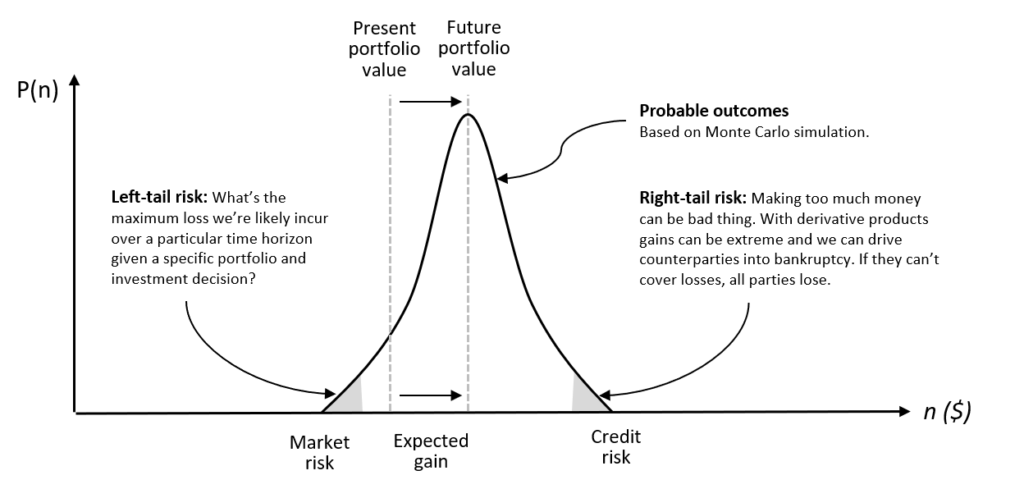

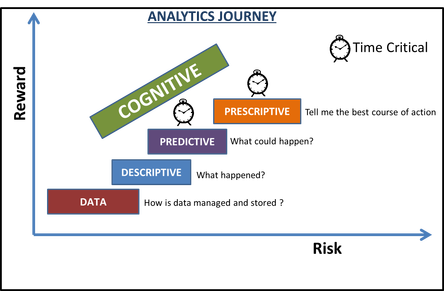

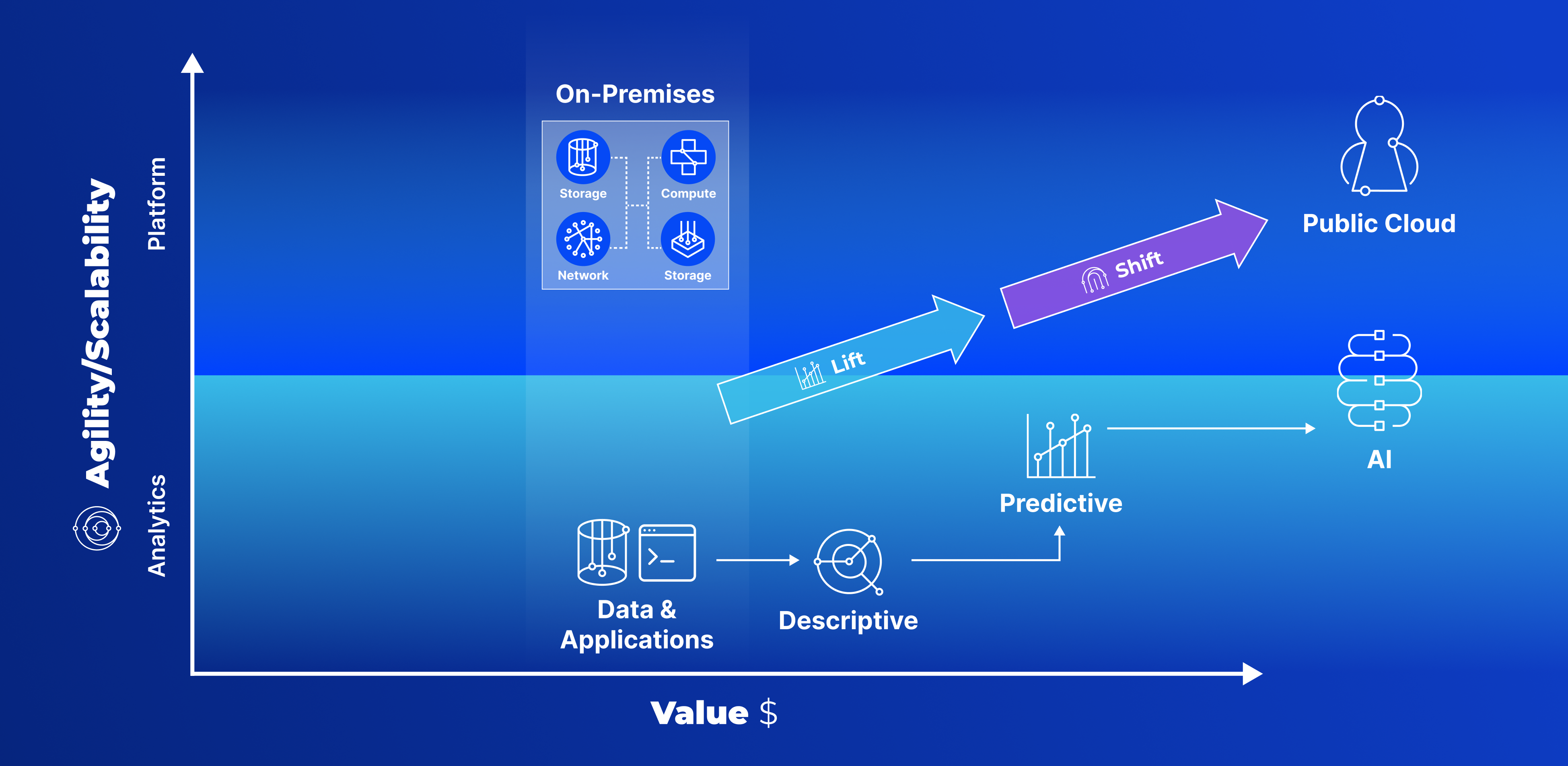

The simplest enterprise workloads – about 20% of all enterprise workloads – have already been moved to the public cloud and have benefited from greater agility and scalability. However, the remaining 80% of workloads remain on-premises.[1] Contrary to what many public cloud providers proclaim, traditional Lift and Shift (Figure 1) cloud transformation is not always economical or easy, especially for the analytics and AI journey.

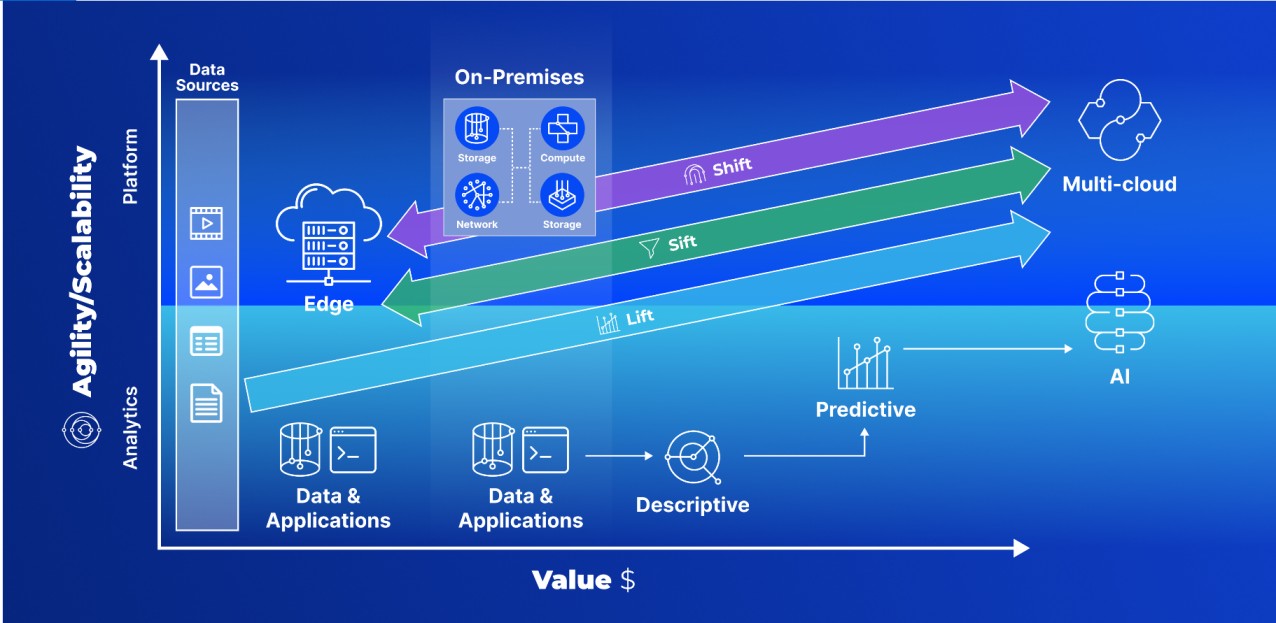

Figure 1: Traditional Lift and Shift of On-Premises Data and Applications to the Public Cloud is Inadequate

If public cloud migration were easy for many enterprise applications and data, most businesses would already have migrated most of their workloads to the public cloud and realized the associated benefits. On-premises solutions still provide many benefits, especially for analytics and AI. Table 1 summarizes the benefits of each approach.

| Public Cloud | On-Premises |
| --- | --- |
| · High scalability and flexibility for unpredictable workload demands with varying peaks/valleys<br>· Rapid software development, test and proof of concept pilot environments<br>· No capital investments required to deploy and maintain infrastructure<br>· Faster provisioning time and reduced requirements on IT expertise as this is managed by the cloud vendor | · Bring compute to where data resides since it is hard to move existing data lakes into the cloud because of large data volumes<br>· Supports analytics at the edge and other distributed environments to make immediate decisions<br>· Provides dedicated and secure environments for compliance to stringent regulations and/or unique workload requirements<br>· High/custom SLA performance and efficiency<br>· Retains the value of investments in existing solutions |

Table 1: Benefits of Public Cloud and On-Premises Infrastructures for Analytics/AI

To improve the agility and scalability of the remaining 80% of enterprise applications and data, hybrid clouds make it possible to combine the benefits of a public cloud with those of an on-premises infrastructure.

The Hybrid Multi-Cloud Platform is the New Battleground

Over the last decade, the term “cloud wars” has been used to describe the competition among public cloud providers: AWS, Microsoft Azure, Google Cloud, IBM Cloud and a few others. But with 80% of the workloads still left on-premises, this is more a rift or a squabble than a war. The real “cloud war” is only beginning, and it is over the rapidly growing hybrid cloud platform, particularly for analytics and AI.

The worldwide market for data services for the hybrid cloud is expected to grow at a healthy CAGR of 20.53% from 2016 to 2021[i] as enterprises prioritize a balance of public and private infrastructure. Only 31% of enterprises see public cloud as their top priority, while a combined 45% of enterprises see hybrid cloud as the future state.[2]
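To put that growth rate in perspective, compounding a 20.53% CAGR over the five years from 2016 to 2021 implies a market roughly 2.5 times its starting size. The short Python sketch below shows the arithmetic. The function name `growth_multiple` is ours, for illustration only, and the calculation assumes the rate compounds annually over five full years.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor implied by compounding `cagr` annually for `years` years."""
    return (1.0 + cagr) ** years


# Figures quoted in the text: 20.53% CAGR from 2016 to 2021 (five annual periods, an assumption).
multiple = growth_multiple(0.2053, 2021 - 2016)
print(f"Implied market-size multiple over 5 years: {multiple:.2f}x")
```

The same function can be reused to sanity-check any other quoted CAGR against its start and end years.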

Today, large organizations leverage almost five clouds on average. The percentage of enterprises with a strategy to use multiple clouds is 84%,[ii] and 56% of organizations plan to increase their use of containers.[iii]

Hybrid cloud platforms that support a multi-cloud architecture will be the winning platforms in the future, especially as more data is ingested at the edge with the transition to 5G and stored on-premises or in the cloud.

Lift, Sift and Shift Data and Applications for Swift Connect from the Edge to Multi-cloud

To win the impending hybrid cloud war, the following five elements must be in place:

- Lift: Ability to move/process data and applications all the way from the edge to the enterprise or to a multi-cloud environment and migrate workloads efficiently

- Sift: Automate the tedious, semi-manual, error-prone processes currently used to cleanse and prepare data, remove bias, prioritize it for analysis, and provide clear traceability

- Shift: Ability to move compute to where the data resides to minimize data movement costs and improve performance

- Swift: Multi-directionally execute all the above operations at scale with agility, flexibility and high-performance so that data can move between public, private and hybrid clouds, on-premises and edge installations, and can be updated as needed

- Connect: Seamlessly connect the edge, on-premises and multi-cloud implementations into a cohesive and agile environment with a single dashboard for centralized observation across all platform entities

Figure 2 depicts this hybrid cloud platform. It empowers customers to experiment with and choose the programming languages, tools, algorithms and infrastructure they need to build data pipelines, to train and productionize analytics/AI models in a governed way for the enterprise, and to share insights throughout the organization from the edge to the cloud.

Figure 2: An Agile Hybrid Cloud Platform for Analytics/AI that Scales from the Edge to Multi-cloud

To win the hybrid cloud war, the platform must scale, be compliant, resilient and agile, and support open standards for interoperability. This gives clients the flexibility to adapt quickly to changing business needs and to choose the best components from multiple providers in the ecosystem.

The IBM Hybrid Multi-cloud Vision and Key Think 2020 Announcements

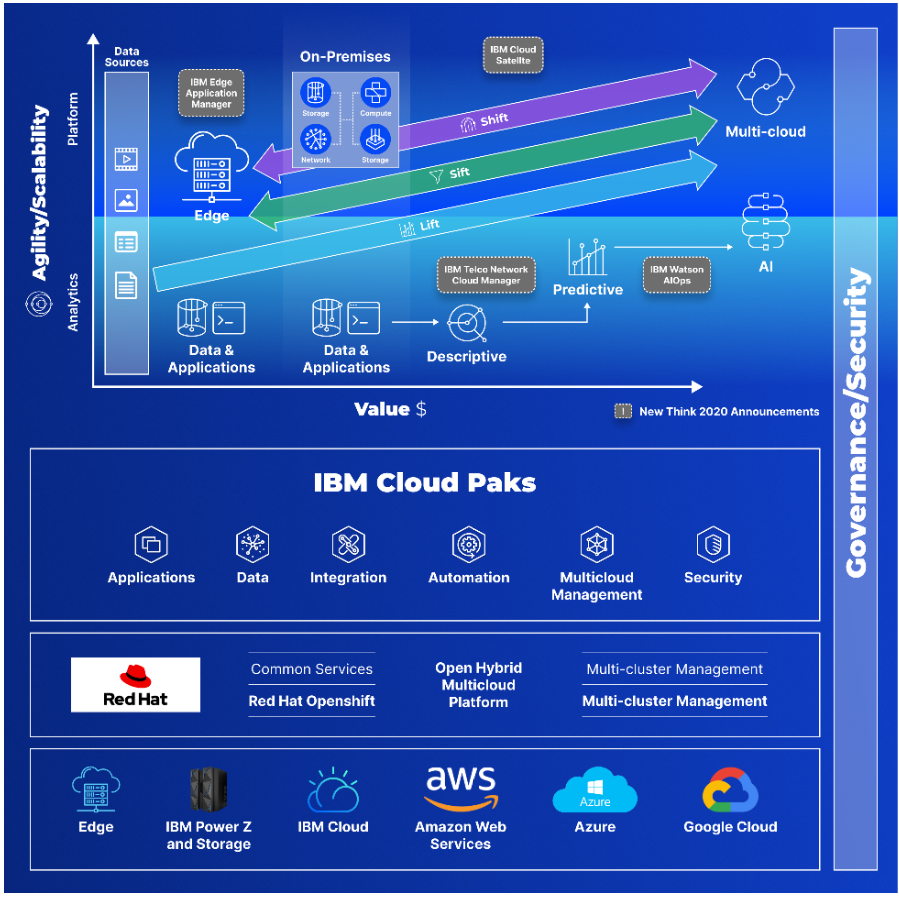

With the acquisition of Red Hat, IBM has laid the foundation to win the hybrid cloud war by enabling clients to avoid the pitfalls of single-vendor reliance. Clients can scale workloads across multiple systems and cloud vendors with increased agility through containers and unify the entire infrastructure from the edge to the data center to the cloud (Figure 3).

Red Hat provides open source technologies to bring a consistent foundation from the edge to on-premises or to any cloud deployment, whether public, private, hybrid or multi-cloud:

- Red Hat OpenShift is a complete container application platform built on Kubernetes – an open source platform that automates Linux container operations and management.

- Red Hat Enterprise Linux and Red Hat OpenShift bring more security to every container and better consistency across environments.

- Red Hat Cloud Suite combines a container-based development platform, private infrastructure, public cloud interoperability, and a common management framework into a single, easily deployed solution for clients who need a cloud and a container platform.

Figure 3: IBM Hybrid Multi-cloud Vision and Key New Think 2020 Announcements

For decades, IBM’s core competency has been as a trusted technology provider for enterprise customers running mission-critical applications. IBM delivers enterprise systems, software, network and services. Key hybrid cloud offerings include:

- IBM Cloud Paks (Figure 3) are enterprise-ready, containerized services that give clients an open, faster and more secure way to move core business applications to any cloud. Each of the six IBM Cloud Paks includes containerized IBM middleware and common cloud services for development and management on top of a common integration layer, and runs wherever Red Hat OpenShift runs.

- IBM Cloud is built on open standards, with a choice of many cloud models: public, dedicated, private and managed, so clients can run the right workload on the right cloud model without vendor lock-in.

- IBM Systems deliver reliable, flexible and secure compute, storage and operating systems solutions.

- IBM Services help organizations by bringing deep industry expertise to accelerate their cloud journeys and modernize their environments.

Reinforcing the strength of its existing portfolio of products and offerings, IBM launched several AI and hybrid cloud offerings, backed by a broad ecosystem of partners, to help enterprises and telecommunications companies speed their transition to edge computing in the 5G era (Figure 3):

- IBM Cloud Satellite lets customers use IBM Cloud services anywhere (on IBM Cloud, on premises or at the edge), delivered as a service from a single pane of glass controlled through the public cloud. IBM Cloud Satellite specifically extends the IBM Public Cloud with a generalized IaaS and PaaS environment, including support for cloud native apps and DevOps, while providing access to IBM Public Cloud Services in the location that works best for individual solutions.

- IBM Watson AIOps uses AI to automate how enterprises self-detect, diagnose and respond to IT anomalies in real time to better predict and shape future outcomes, focus resources on higher-value work and build more responsive and intelligent networks that can stay up and running longer.

- IBM Edge Application Manager is an autonomous management solution designed to enable AI, analytics and IoT enterprise workloads to be deployed and remotely managed, delivering real-time analysis and insight at scale. The solution enables the management of up to 10,000 edge nodes simultaneously by a single administrator.

- IBM Telco Network Cloud Manager runs on Red Hat OpenShift to deliver intelligent automation capabilities that orchestrate virtual and container network functions in minutes. Service providers will be able to manage workloads on both Red Hat OpenShift and the Red Hat OpenStack Platform, which will be critical as telcos increasingly look for ways to modernize their networks for greater agility and efficiency, and to provide new services today and as 5G adoption expands.

With the Hybrid Cloud Strategy in Place, the Focus is on Execution

The new product announcements and initiatives launched during IBM’s 2020 Think Digital event reinforce IBM’s intent – even during this pandemic – to march full steam ahead to execute on its hybrid multi-cloud vision: Any application can run anywhere on any platform, at scale, wherever data resides, with resilience, agility and interoperability across all clouds on an open, secure and governed enterprise-grade environment.

IBM has also issued the Call for Code challenge to address the current pandemic. This global challenge encourages innovators to create practical, effective, and high-quality applications based on one or more IBM Cloud services (for example, web, mobile, data, analytics, AI, IoT, or weather) that can have an immediate and lasting impact on humanitarian issues. Teams of developers, data scientists, designers, business analysts, subject matter experts and more are challenged to build solutions to mitigate the impact of COVID-19 and climate change.

With this compelling hybrid strategy and a continuing focus on collaboratively solving challenging problems, we believe IBM is well positioned to execute and win the hybrid cloud war because:

- Strong leadership with technical, business and sales savvy is in place with Arvind Krishna and Jim Whitehurst (President of IBM).

- This pragmatic strategy builds on IBM’s traditional strengths of serving enterprise customers on their cloud journey by providing the much-needed technologies and momentum for modernization.

- Alongside IBM Research, the autonomy that Red Hat enjoys will ensure that its entrepreneurial growth culture continues to be a lightning rod for IBM innovation.

- With the focus on an open platform, clients and the partner ecosystem will have the assurance to co-create high-value offerings and services to meet future challenges.

Last and perhaps most important, the technology industry is constantly being disrupted, with new billion-dollar businesses emerging rapidly. This century’s first decade witnessed the rise of social media, mobile and cloud computing. As keen observers of IBM both from the inside and outside, we believe this is probably the first time in recent decades that IBM is endowed with a technology- and business-savvy leadership team that has a track record of rapidly growing large billion-dollar businesses. As the cloud wars rage in the next decade, there will undoubtedly be many disruptions. Perhaps now more than at any time in the recent past, IBM will not only spot these opportunities but also boldly act to galvanize its incredible human resources and its vast ecosystem to build these next-generation hybrid cloud and AI businesses.

[1] Nagendra Bommadevara, Andrea Del Miglio, and Steve Jansen, “Cloud adoption to accelerate IT modernization”, McKinsey & Company, 2018

[1] https://www.ibm.com/downloads/cas/V93QE3QG

[1] RightScale STATE OF THE CLOUD REPORT 2019 from Flexera

Cabot Partners is a collaborative consultancy and an independent IT analyst firm. We specialize in advising technology companies and their clients on how to build and grow a customer base, how to achieve desired revenue and profitability results, and how to make effective use of emerging technologies including HPC, Cloud Computing, Analytics and Artificial Intelligence/Machine Learning. To find out more, please go to www.cabotpartners.com.

Copyright © 2020 Cabot Partners Group, Inc. All rights reserved. Other companies’ product names, trademarks, or service marks are used herein for identification only and belong to their respective owners. All images and supporting data were obtained from IBM or from public sources. The information and product recommendations made by the Cabot Partners Group are based upon public information and sources and may also include personal opinions of both Cabot Partners Group and others, all of which we believe to be accurate and reliable. However, as market conditions change and are not within our control, the information and recommendations are made without warranty of any kind. The Cabot Partners Group, Inc. assumes no responsibility or liability for any damages whatsoever (including incidental, consequential or otherwise), caused by your or your client’s use of, or reliance upon, the information and recommendations presented herein, nor for any inadvertent errors which may appear in this blog. This blog was developed with IBM funding. Although the blog may utilize publicly available material from various vendors, including IBM, it does not necessarily reflect the positions of such vendors on the issues addressed in this document.

Published by: Srini Chari