Nutanix Conference on Hybrid-Cloud Management

Harmonizing Hybrid-Cloud Environments

By Jean S. Bozman, Cloud Architects LLC

Customers across all regions of the world are facing a turning point in their IT landscapes. The world of cloud computing is colliding with the world of traditional IT.

The complexity that customers see in these mixed environments must be resolved, or business processing will slow – affecting the dynamics of their entire business.

It is a time of introspection for IT executives and business executives, two groups that are looking closely at the infrastructure already installed, evaluating the costs of operation, and planning their decisions about the way forward.

The megatrends are clear: Across the board, most of the world’s hardware and software suppliers are driving strong support for hybrid cloud and multi-cloud deployments. Yet, a very big obstacle remains: the hyperscale world of cloud infrastructure must “harmonize” with a constellation of IT data resources that were originally designed for an earlier era.

Customers are already working to bring the hyperscale and traditional IT worlds together. But that goal isn’t easy, or quick, to accomplish.

Here’s why:

- Applications must be updated to support open standards, containers and microservices – and new ones written for the new environments.

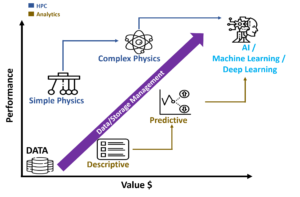

- The rapid rise of GenAI, AI/ML training and AI inferencing requires that end-to-end computing work better, more smoothly, and more consistently across a customer’s entire IT landscape. Inconsistent data feeding AI models will skew – or ruin – AI-based results.

- Data must be optimized, in both format and storage, to improve application performance and AI results, and to reduce system latency. Objects and unstructured data – estimated to be 80 percent of all data – must be managed alongside the structured data that is the “meat-and-potatoes” foundation of transactional IT systems for banks, manufacturing, and retail companies.

- Importantly, aging networking systems linking end-to-end hardware/software infrastructure must now be updated to support multiple generations of equipment – and to do so with greater security and cyber-resiliency.

Nutanix’ Strategy for a Unified IT Environment

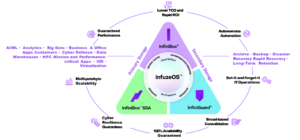

Nutanix presented its broad strategy for forging a more unified, consistent IT landscape at its .NEXT conference, which it hosted in Barcelona, Spain, this month. The company added to its strategy of enabling software-defined systems and hyperconverged systems – and of managing hundreds of distributed systems across the hybrid cloud, using the control plane and dashboards of its Nutanix Cloud Platform (NCP) to do so.

Consolidate and Simplify

Many Nutanix customers are working to consolidate and simplify their firms’ three-tier infrastructure by using Nutanix software and hyperconverged systems to manage workloads across their entire IT landscape.

The company plans to play a key role in customers’ hardware modernization and in their migrations to public, private or hybrid clouds. Nutanix was clear about supporting hyperconverged infrastructure – its traditional role – while reaching out to more traditional hardware accounts that have many existing hardware “footprints” in their data centers.

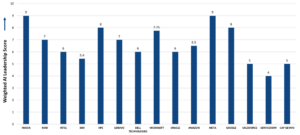

Throughout the .NEXT conference, Nutanix showed that its overall strategy is aimed at broadening the company’s customer base worldwide, through support for open-software standards (e.g., CNCF for open programming), for all storage types (files, blocks and objects), for hardware partners (Dell Technologies, Cisco UCS, Supermicro), and for a range of processor types (e.g., NVIDIA GPUs, AMD EPYC processors, Intel x86 processors).

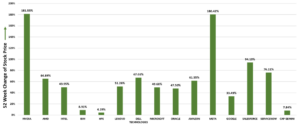

A recurring theme at this conference was reaching more customers – and addressing a wider range of use-cases for software-defined and hyperconverged systems in the user base. The $2 billion company has seen steady growth, and it is looking to accelerate that growth through support for AI/ML – the rapidly growing market in the IT industry that has captured customers’ mind-share and worldwide attention.

Two of the largest customers that spoke on-stage at the .NEXT conference were Wells Fargo, a large financial institution, and John Deere, which makes tractors and agricultural equipment. Both companies have substantial Nutanix deployments supporting application modernization and business transformation. They are strong examples of large, well-known companies that have deployed Nutanix software and are realizing business benefits from it.

The Hybrid Cloud and the Enterprise Data Center

Expanding its broad support of open software standards is another path for Nutanix to grow its customer base among enterprise customers, CSPs, and MSPs across Europe (EMEA), the Americas, and Asia/Pacific. Although customers and service providers are seen as two very different groups, they are both facing similar decisions regarding spending, budgets and controlling operational costs for 2025. The very largest CSPs – AWS, Microsoft Azure, and Google Cloud Platform (GCP) – often customize their own management software. But most CSPs and MSPs acquire cloud-management software from ISVs and SIs, buying much of it from third-party software providers, including Nutanix, Veeam, Veritas, and VMware.

Tapping Sources of Revenue Growth

For Nutanix, other avenues for generating revenue growth include stronger ties with large tech companies that have already made strong inroads in enterprise and CSP/MSP accounts – and that can provide access to large installed bases.

These large partners include NVIDIA, which is seeing rapid growth with its GPUs for AI large language models (LLMs) and AI inferencing across many industrial sectors; AMD, with its EPYC processors for HPC and high-performance applications; Dell Technologies, with its widely installed PowerEdge servers; Cisco, with its worldwide base of UCS blade servers; and Supermicro, with its rack-ready systems for rapid expansion of compute and storage in enterprise data centers and in cloud provider (CSP and MSP) infrastructure.

Highlights of the Nutanix .NEXT announcements include:

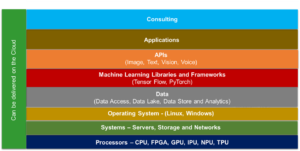

- Nutanix Kubernetes® Platform (NKP). NKP is designed to simplify management of container-based modern applications using Kubernetes. NKP enables a complete, CNCF-compliant cloud-native stack that provides a consistent operating model for securely managing Kubernetes (K8s) clusters across on-premises, hybrid, and multi-cloud environments. NKP supports block, file and object storage – all three major storage formats – and databases-as-a-service for customers’ IaaS deployments.

- Extending Nutanix AHV Use. Nutanix introduced a range of new Nutanix AHV enterprise features and deployment options designed to meet the demands of large-scale enterprise environments. These include support for compute-only servers, in addition to Nutanix’ well-known support for hyperconverged servers, which is intended to accelerate Nutanix use in large enterprise accounts. The AHV features support customers’ virtualization and containerization development efforts.

- Outreach to Enterprise Accounts. Nutanix was clear about its interest in gaining more enterprise customers through the use of AHV partnerships. “We are excited to work with our partners to expand the reach of Nutanix AHV [hypervisors] to compute-only servers beyond traditional hyperconverged servers, further accelerating its adoption by enterprise customers to simplify operations and increase cyber-resilience,” said Thomas Cornely, SVP of Product Management at Nutanix.

- Partnership with NVIDIA. Nutanix and NVIDIA agreed to integrate NVIDIA’s NIM inference microservices with Nutanix’ GPT-in-a-Box 2.0 software. Based on an interoperable API, the connection between NVIDIA’s NIM and Nutanix’ GPT-in-a-Box 2.0 software simplifies and supports deployment of scalable, secure and high-performance GenAI applications that will run across customer landscapes, including Core, Cloud and Edge infrastructure. For AI workloads, the Nutanix solution includes integrations with NVIDIA NIM inference microservices and the Hugging Face library of large language models (LLMs). Nutanix GPT-in-a-Box 2.0 is a full-stack solution that simplifies Enterprise AI adoption through tight integration with the Nutanix Objects Storage and Nutanix Files Storage offerings for model and data storage. It is another example of Nutanix working to pull together support for customers’ objects and files, which have often been stored separately.

- Partnership with Dell Technologies. Nutanix and Dell Technologies agreed to offer a turnkey, hyperconverged appliance, shipping Nutanix Cloud Platform software on Dell’s PowerEdge servers. Dell has also said it will ship an “appliance” combining the Dell PowerEdge servers with Nutanix software. The Nutanix AHV platform allows customers to scale compute and storage independently, providing flexible scalability to meet rising workload demand. Nutanix Cloud Platform (NCP) for Dell PowerFlex, leveraging Nutanix’ AHV hypervisor software, combines the Nutanix Cloud Platform for compute with Dell’s PowerFlex for storage.

- Partnership with Cisco. Nutanix announced that it is working with Cisco to certify Cisco UCS blade servers on a worldwide basis. Introduced in 2009, UCS blade servers provide a large customer base for installing Nutanix AHV hypervisor software on compute-only nodes. Nutanix and Cisco have said the goal of this joint project is to expand deployment options, such as repurposing already-deployed server hardware, including the Cisco UCS blade servers, through the use of Nutanix AHV virtualization software.

- Power Monitoring and Sustainability. As customers update data centers, outfitting them for improved power/cooling capabilities, monitoring real-time changes in power consumption will become critical to daily operations. In line with ESG and Sustainability programs at customer sites, Nutanix is adding improved visibility of power consumption in Nutanix environments. The capabilities, integrated into the Nutanix Cloud Infrastructure (NCI) software stack, are expected to streamline energy monitoring by highlighting detailed data associated with specific workloads and supporting decisions to adjust power levels.

Summary

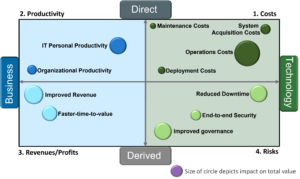

Nutanix presented a clear view of its strategy for 2024-2025, showing that it has multiple paths to build its base of enterprise customers and CSPs/MSPs that are building hybrid-cloud infrastructure. Nutanix’ aim is to address customers’ ongoing operational costs by supporting consolidation of systems in the data center, and by monitoring and managing power/cooling costs for installed compute and storage systems.

At its .NEXT conference, the company presented a compelling and cogent case for leveraging the power of hybrid-cloud and multi-cloud infrastructure. Certainly, that is the direction that most of the world’s major hardware and software companies are taking, based on their announcements over the course of 2024.

Hybrid cloud deployments, combined with customers’ strong interest in AI and GenAI, are boosting growth in the tech marketplace for the vendors that provide the tools and management software that help customers move to a modern end-to-end IT infrastructure.

We will soon see the results of the company’s well-considered strategy of investing in – and expanding – its tech partnerships worldwide. The company’s strategy, combined with a strong portfolio of new features in Nutanix’ software, should pay off with increased revenues in CY2025.